Generative AI

Generative AI has reached a new level in recent years: it is no longer merely a text assistant but can serve as a productive component in development and analysis processes. In digitalization projects, this creates clear added value, for example through faster evaluations, better documentation, structured knowledge preparation and more efficient collaboration between business units, IT and operations.

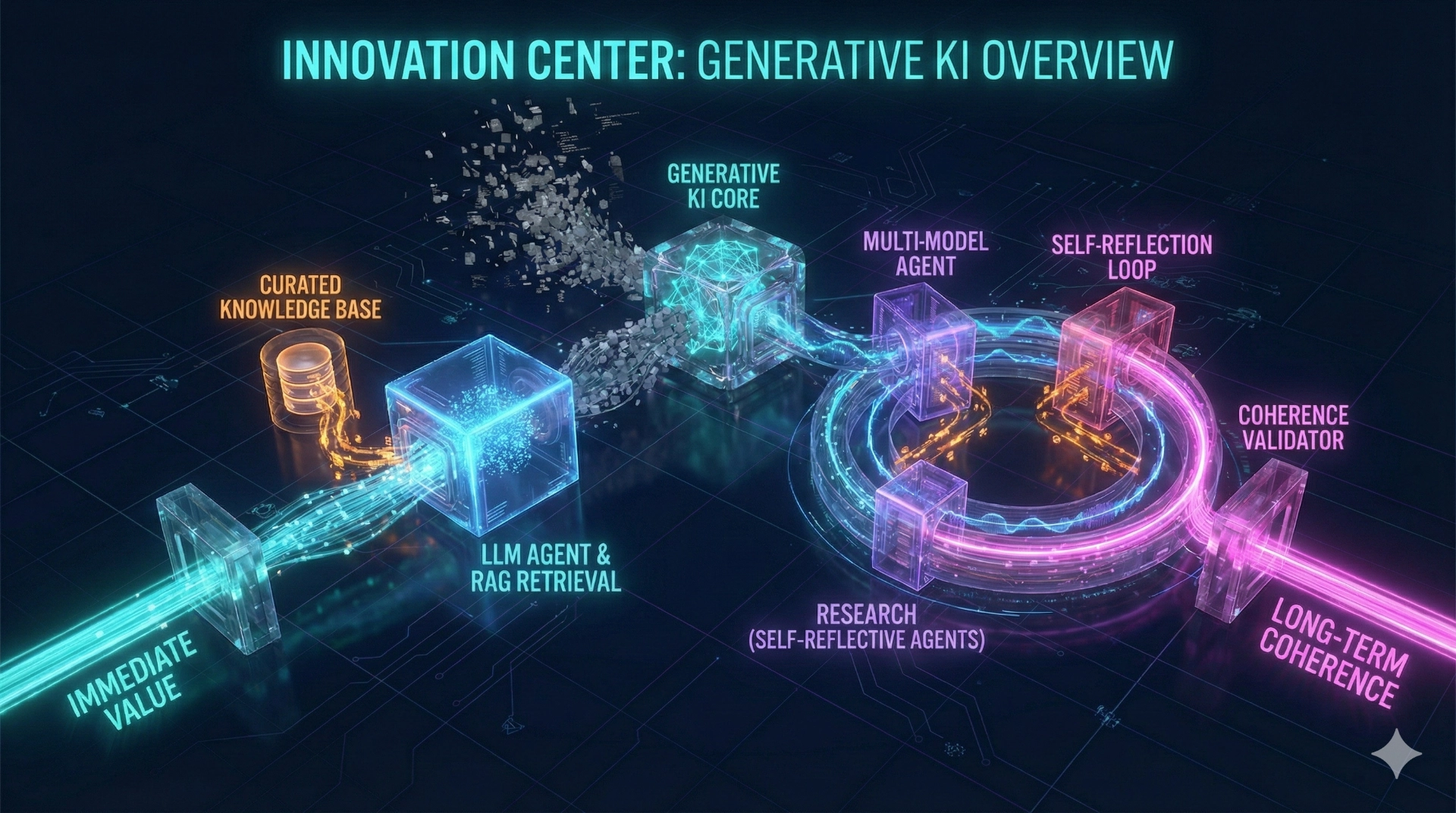

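At the Innovation Center, we focus on two closely connected areas: in development, we apply agent technology and RAG to digitalization projects; in research, we work on AI systems designed to stay coherent over the long term, correct themselves and validate results across multiple instances.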

Development: Agent technology & Retrieval-Augmented Generation (RAG) for robust solutions in digitalization projects

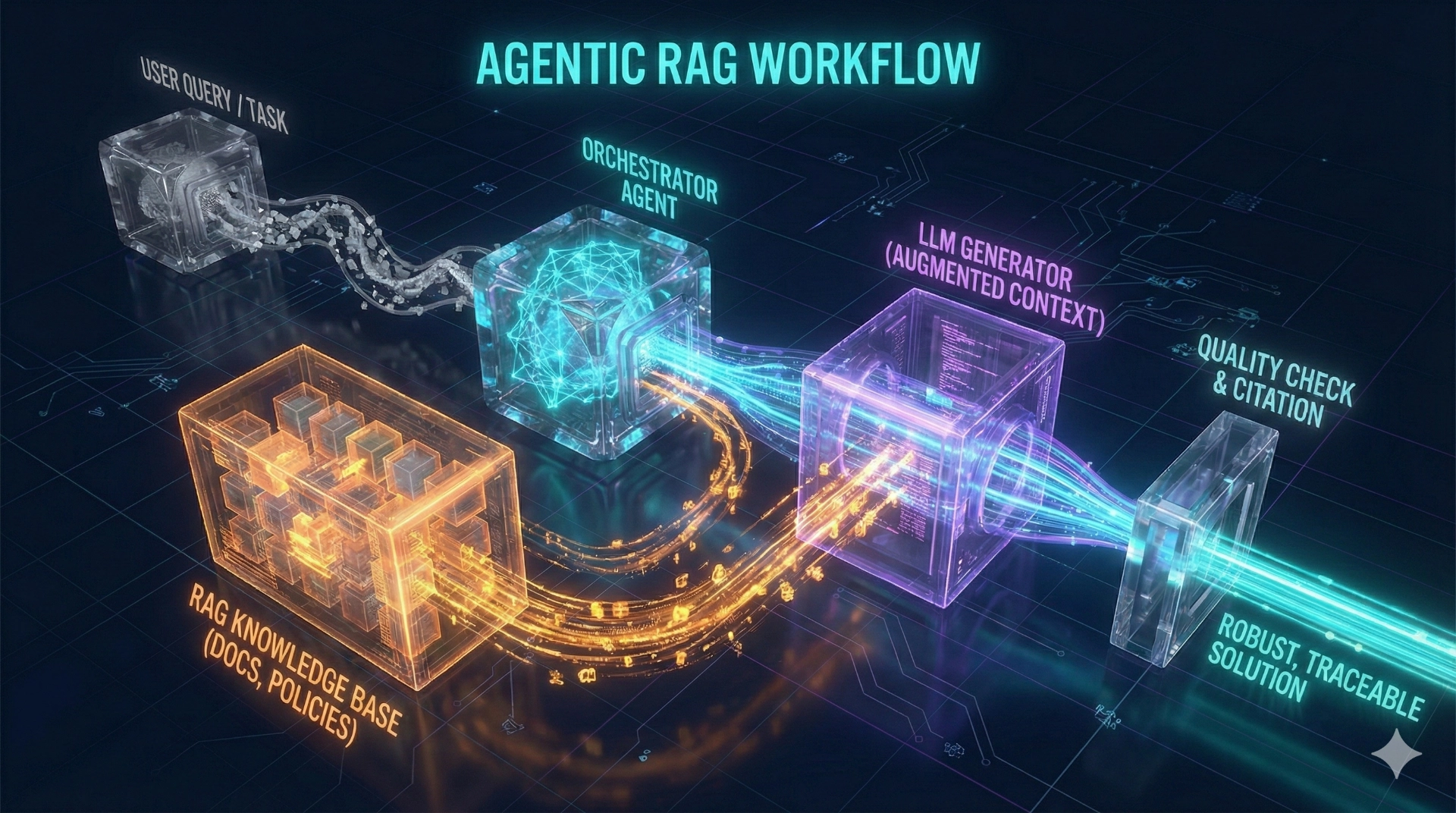

In practice, “an AI that answers questions” is rarely enough. What really matters is that generative AI is integrated into real processes – for example in analysis, support, reporting, decision support and documentation.

To achieve this, we organize Large Language Models (LLMs) into agent workflows: tasks are broken down into traceable subtasks, results are checked, and open issues are flagged and documented.
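The workflow pattern above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not our production implementation: `run_workflow`, `StepRecord` and `WorkflowReport` are hypothetical names, and the `decompose`, `execute` and `check` hooks are stubs standing in for what would typically be LLM or tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class StepRecord:
    """Traceable record of one subtask: input, result and check outcome."""
    subtask: str
    result: str
    passed: bool
    issue: Optional[str] = None


@dataclass
class WorkflowReport:
    steps: list[StepRecord] = field(default_factory=list)

    @property
    def open_issues(self) -> list[str]:
        # Issues flagged by every failed check, kept for documentation
        return [s.issue for s in self.steps if not s.passed and s.issue]


def run_workflow(
    task: str,
    decompose: Callable[[str], list[str]],
    execute: Callable[[str], str],
    check: Callable[[str, str], Optional[str]],
) -> WorkflowReport:
    """Split a task into subtasks, run each one, check its result and flag failures.

    The three hooks are hypothetical; in practice they would usually wrap LLM calls.
    `check` returns None if the result is acceptable, otherwise a description of the issue.
    """
    report = WorkflowReport()
    for subtask in decompose(task):
        result = execute(subtask)
        issue = check(subtask, result)
        report.steps.append(StepRecord(subtask, result, issue is None, issue))
    return report


# Usage with stub hooks: the second subtask returns nothing and gets flagged.
report = run_workflow(
    "Summarize open incident tickets",
    decompose=lambda task: ["collect tickets", "group by root cause", "draft summary"],
    execute=lambda sub: "" if sub == "group by root cause" else f"done: {sub}",
    check=lambda sub, res: None if res else f"empty result for '{sub}'",
)
print(report.open_issues)  # ["empty result for 'group by root cause'"]
```

Keeping every step as a record, including the ones that passed, is what makes the workflow traceable: the report documents not only the final result but how it was reached and where it is still incomplete.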

A central building block is Retrieval-Augmented Generation (RAG): before an answer is generated, relevant content is retrieved from curated sources – for example technical documentation, policies, process descriptions, support tickets or customer-specific knowledge – and passed to the model as context. This improves domain accuracy and traceability, because each answer can be linked to the sources it draws on, and it reduces typical risks of generative systems such as fabricated or outdated statements.
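The retrieve-then-generate step can be illustrated with a minimal Python sketch. Simple keyword overlap stands in for the embedding-based vector search that RAG systems typically use, the generation call itself is omitted, and `Document`, `retrieve`, `build_prompt` and the sample corpus are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # file name or ticket ID, kept so answers stay traceable
    text: str


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by keyword overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [pair for pair in scored if pair[0] > 0]  # drop irrelevant documents
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep corpus order
    return [d for _, d in scored[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Assemble a grounded prompt; numbered sources let the answer cite them."""
    context = "\n".join(f"[{i}] ({d.source}) {d.text}" for i, d in enumerate(docs, 1))
    return (
        "Answer using only the sources below and cite them by number. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Usage with a small illustrative corpus
corpus = [
    Document("policy-travel.md", "Travel expenses must be approved by the line manager."),
    Document("howto-vpn.md", "Connect to the VPN before accessing internal systems."),
    Document("finance-faq.md", "Travel expenses above the limit need finance approval."),
]
question = "Who approves travel expenses?"
hits = retrieve(question, corpus)
print([d.source for d in hits])  # ['policy-travel.md', 'finance-faq.md']
prompt = build_prompt(question, hits)  # this string would be sent to the LLM
```

Numbering the sources in the prompt is what supports traceability: the model can be instructed to cite `[1]`, `[2]` and so on, and reviewers can check each statement against the cited document.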

Value in the project context

- Turn knowledge into a productive asset: Organizational knowledge becomes discoverable, maintainable and usable in reports and assistant functions.

- Increase quality: Answers are grounded in the provided sources rather than in the model's trained knowledge alone, which significantly reduces hallucinations.

- Accelerate workflows: Documentation and analysis become faster and more consistent, and decisions are easier to justify.

Research: Self-reflective multi-model agent systems for long-term coherence and robustness

In complex scenarios – such as large-scale software development, multi-stage analysis pipelines or demanding decision systems – today’s agent systems reach their limits. Typical issues include context drift, inconsistent decisions over time and fragile chains of reasoning.

That is why we are developing a research platform for cooperating AI instances that aim not only for speed but for quality, achieved through self-correction, cross-validation and long-term coherence. The core idea: instead of a single instance “doing everything”, specialized agents collaborate and systematically check each other’s work.

Research focus areas

- Self-reflection & deliberative reasoning: Assumptions are made explicit, counter-hypotheses are tested and weaknesses are surfaced.

- Cross-validation between multiple instances: Results are assessed independently, and conflicts are exposed in a structured way.

- Cross-validation across different models: Different models compensate for each other’s bias profiles and error characteristics.

- Long-term context stability: Mechanisms for multi-layered contexts, work states and structured memory components help keep agents consistent over long-running tasks.
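Cross-validation between instances or models can be illustrated by a simple majority vote. The sketch below is an assumption for illustration, not a description of the research platform: each instance is a hypothetical callable returning a short answer, answers are normalized before comparison, a quorum decides whether a result is accepted, and dissenting answers are reported as conflicts instead of being discarded.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    answer: Optional[str]      # agreed answer, or None if no quorum was reached
    agreement: float           # share of instances backing the most common answer
    conflicts: dict[str, str]  # instance name -> dissenting answer


def cross_validate(
    question: str,
    instances: dict[str, Callable[[str], str]],
    quorum: float = 0.5,
) -> Verdict:
    """Ask every instance independently and compare the normalized answers."""
    if not instances:
        raise ValueError("at least one instance is required")
    answers = {name: ask(question).strip().lower() for name, ask in instances.items()}
    top, votes = Counter(answers.values()).most_common(1)[0]
    agreement = votes / len(answers)
    # Expose disagreement explicitly instead of silently discarding it
    conflicts = {name: a for name, a in answers.items() if a != top}
    return Verdict(top if agreement > quorum else None, agreement, conflicts)


# Usage with stub instances standing in for different models
instances = {
    "model_a": lambda q: "Section 4.2",
    "model_b": lambda q: "section 4.2 ",
    "model_c": lambda q: "Section 7",
}
verdict = cross_validate("Which clause governs data retention?", instances)
print(verdict.answer, round(verdict.agreement, 2), verdict.conflicts)
# section 4.2 0.67 {'model_c': 'section 7'}
```

Requiring strictly more than half of the instances to agree means a tie yields no answer, so the conflict surfaces for review instead of being resolved arbitrarily.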

This research is especially relevant in high-stakes, regulated domains, wherever traceability, auditability, data sovereignty and controlled decision processes are mandatory. At the same time, it adds value in any industry where complex decisions must remain consistent and verifiable over long periods.